AI-mindful boards

Navigating the technological landscape in fulfilment of fiduciary duties

As the technological landscape rapidly evolves, boards are faced with the challenge of harnessing the power of artificial intelligence (AI) while fulfilling their fiduciary duties. Dr Agnes KY Tai, Director of Great Glory Investment Corporation and Senior Advisor of iPartners Holdings Ltd, delves into the best practices for utilising AI and highlights the essential discussions boards need to engage in regarding this powerful technology.

Highlights

- identifying skills gaps within the board, management, business units and the workforce is key to effective AI integration

- boards must stay current with evolving regulations and safeguard against AI-related risks, including fraud risk

- while AI can provide valuable insights, boards remain ultimately accountable for all decisions, whether AI-assisted or not

While the foundations of AI go back to the 1950s and 1960s, it was only about a decade ago that advances in deep learning and neural networks accelerated the use of AI and generative AI (see ‘What is the difference between AI and generative AI?’ below) across diverse industries. Today, AI is everywhere. It is transforming manufacturing processes and data use practices across many sectors of the economy. It is in healthcare, self-driving cars, chatbots, virtual assistants, facial recognition systems and the algorithms used by online platforms to analyse user preferences and behaviour. AI is also now a common tool in the creative industries.

The increasingly widespread use of AI has significant implications for board oversight and accountability. Boards must establish effective governance frameworks for AI initiatives, aligning them with the company’s strategic objectives and ethical standards. While AI can provide valuable insights, boards remain ultimately accountable for all decisions, whether AI-assisted or not. Maintaining oversight of risks and opportunities, as well as upholding full accountability, is crucial to ensure responsible and effective AI adoption.


What should be on the board’s agenda?

Business model

Boards need to assess how AI can keep their businesses relevant and thriving – understanding the cost of not utilising AI is vital. Identifying skills gaps within the board, management, business units and the workforce is key to effective AI integration. Boards should explore how AI can provide a sustainable competitive edge in areas such as problem-solving, imagination and effective use of prompt engineering. Nevertheless, boards should also consider whether any pivoting of the business model to better exploit the potential of AI aligns with the company’s purpose, principles and core values.

Strategic integration

Boards that have not already done so need to begin discussing which policies to adopt for AI integration and risk mitigation. Evaluating resource requirements and potential outcomes across the various strategic options is also essential. The aim should be a metrics-based strategic plan that enhances the business model, increases productivity, improves operational processes and customer experience, optimises the supply chain and enables data-driven decision-making.

The latest AI regulations

Given the evolving regulatory landscape, boards need to stay informed about new regulations such as the European Union (EU)’s AI Act, on which the European Parliament adopted its negotiating position in June 2023, and China’s provisional regulations on generative AI services, which came into effect in August 2023.

Following its approval by the European Parliament on 13 March 2024, the EU’s AI Act is expected to receive final approval in April. It will enter into force 20 days after its publication in the EU’s Official Journal, with the provisions on prohibited systems expected to apply by the end of 2024. Additional provisions will be implemented gradually over the next two to three years. The Act requires prior authorisation for the use of real-time remote biometric identification systems by law enforcement in publicly accessible spaces. The Act also imposes significant penalties for non-compliance with the prohibited systems provisions, with fines of up to €35 million or 7% of global annual turnover, whichever is higher.
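The penalty ceiling described above can be illustrated with a short calculation. The Python sketch below is for illustration only and is not legal guidance: it assumes the ceiling is the higher of the two figures and that turnover means worldwide annual turnover expressed in euros. The function name and constants are hypothetical and are not taken from the Act.

```python
# Illustrative sketch only: the names below are hypothetical, and the
# "higher of the two figures" reading is an assumption stated in the text.
FIXED_CAP_EUR = 35_000_000   # fixed ceiling of EUR 35 million
TURNOVER_PERCENT = 7         # 7% of global annual turnover


def max_fine_cap_eur(global_annual_turnover_eur: float) -> float:
    """Return the assumed maximum fine for breaching the prohibited systems provisions."""
    turnover_based_cap = global_annual_turnover_eur * TURNOVER_PERCENT / 100
    # Assumption: whichever figure is higher sets the ceiling.
    return max(FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    for turnover in (100_000_000, 1_000_000_000):
        print(f"Turnover EUR {turnover:,}: cap EUR {max_fine_cap_eur(turnover):,.0f}")
```

Under these assumptions, the fixed €35 million figure sets the ceiling for any group with turnover below €500 million, while for larger groups the 7% figure dominates. This is why the exposure scales with the size of the business rather than stopping at a flat amount.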

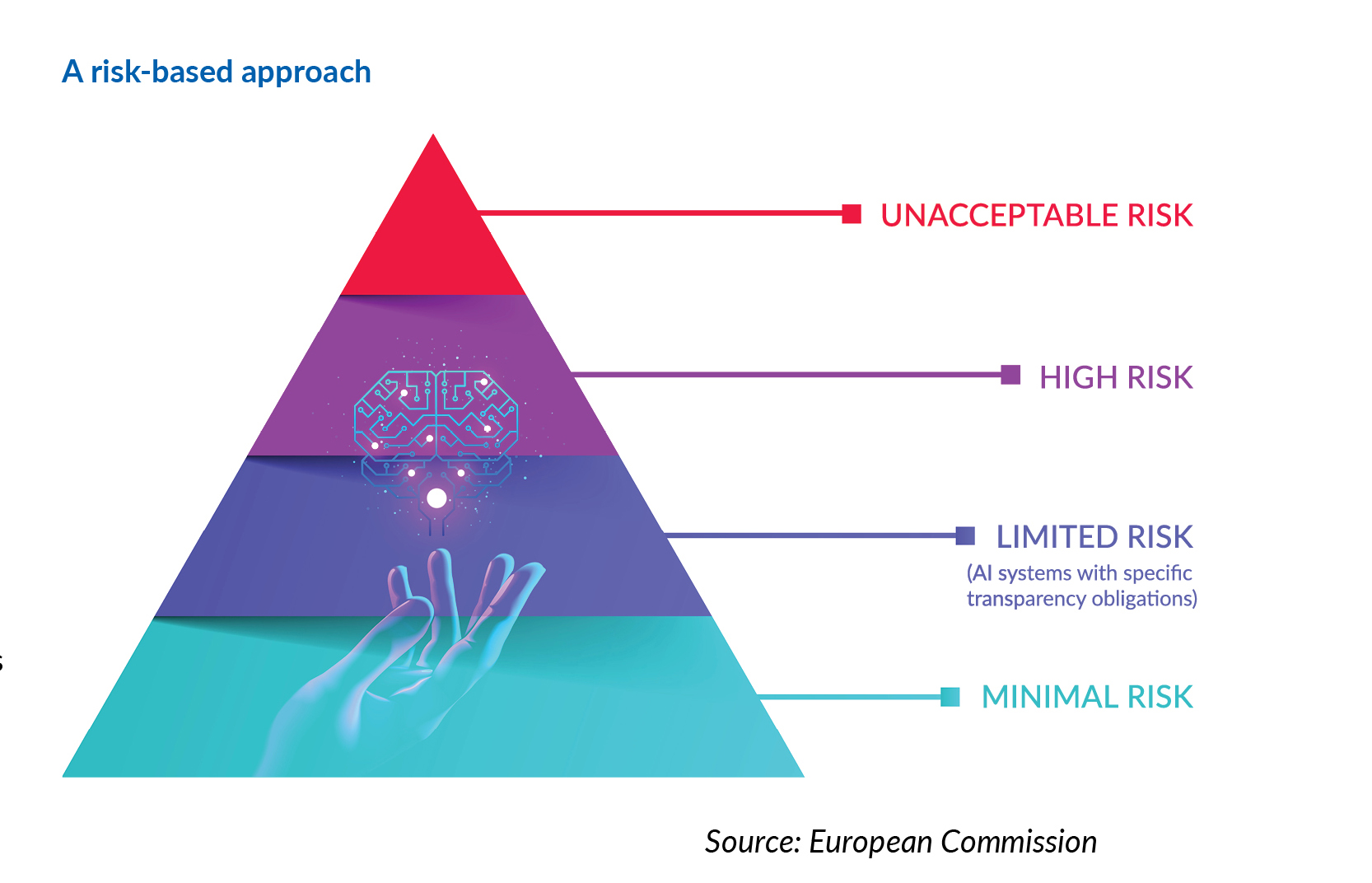

The EU regulatory framework defines four levels of risk for AI systems (see ‘A risk-based approach’) based on the potential threat posed by these systems to the safety, livelihoods and rights of people.

- Systems deemed to carry the highest level of risk (‘unacceptable risk’) will be banned.

- Operators of systems deemed to be ‘high risk’ will be required to observe strict obligations – including ensuring adequate risk assessment and mitigation systems, appropriate human oversight and high levels of transparency.

- For systems deemed to carry ‘limited risk’, the Act focuses on ensuring adequate transparency. For example, people using a chatbot should be made aware that they are interacting with a machine, and AI-generated content (text, audio and video) will need to be identified as such.

- The Act permits unrestricted use of systems deemed to be ‘minimal risk’ and the vast majority of AI systems currently used in the EU fall into this category.

In the Chinese mainland, the Interim Measures for the Management of Generative Artificial Intelligence Services (Interim Measures) were published on 13 July 2023, following a three-month consultation period. These measures, which came into effect on 15 August of that year, were developed through the collaborative efforts of seven key central government ministries and agencies. The measures specifically pertain to the provision of generative AI services to the public in China.

Any breach of the Interim Measures will result in penalties in accordance with the applicable laws and regulations of China. These include, but are not limited to, the Cybersecurity Law, the Data Security Law, the Personal Information Protection Law and the Law on Progress of Science and Technology of the People’s Republic of China. Criminal sanctions may be imposed if the violation constitutes a crime, and administrative sanctions related to public security will be enforced where the violation affects public security.

What is the difference between AI and generative AI?

When it comes to AI and generative AI, definitions matter. There are many misconceptions about how these technologies work and what they can achieve. It is important to state at the outset that neither AI nor generative AI achieves a comprehensive simulation of human intelligence. AI systems in use today are generally trained to perform specific tasks, and they lack the broad range of complex reasoning, abstract thinking, common-sense understanding, creativity and emotional intelligence exhibited by humans.

Generative AI, as a subset of AI, refers to a class of algorithms and models that are designed to generate new content, such as images, text, music and other forms of media. Both AI and generative AI systems are trained on large datasets, but the latter has the ability to learn patterns and structures to create content not found in the training data.

Responsible use and risk management

Robust governance around data privacy, security and ethical AI usage is critical. Boards and governance professionals have to make sure that internal controls are sufficient to manage risks associated with AI deployment, including cybersecurity and regulatory compliance.

The importance of this was dramatically demonstrated in mid-January 2024, when a finance department employee in a multinational firm’s Hong Kong office was defrauded by scammers using deepfake technology. In a virtual meeting, digitally recreated versions of the company’s Chief Financial Officer and other colleagues instructed the employee to make 15 bank transfers to five bank accounts, totalling HK$200 million (US$25.6 million).

This was the first deepfake scam in Hong Kong to involve such a large sum of money, and the loss was unlikely to be covered by insurance policies. In the wake of this case, police have promoted various tactics that employees and others can use to detect whether a fake digital recreation is being used. These tactics include asking colleagues in virtual meetings to move their heads and asking questions that only the actual company executives would be able to answer. Deepfake scams of this kind show why boards must address AI-enabled fraud risk and review whether the company’s insurance cover is sufficiently comprehensive.

Talent and adaptation

Board members and governance professionals should prioritise upskilling themselves in AI know-how to effectively guide talent strategy. They can play a pivotal role in providing employees with relevant AI training and fostering a culture of continuous learning. Boards must raise awareness of the risks of inputting sensitive information on public AI platforms and ensure that the organisation is prepared to integrate and capitalise on AI technologies.

Conclusion

While AI-related risks such as misinformation, disinformation and cyberattacks persist, boards that approach AI adoption with an open mind and a commitment to continuous learning will fare well. By carefully assessing the crucial aspects of AI and generative AI technologies, implementing robust risk management practices and empowering management with adequate resources, forward-looking boards can guide their organisations towards sustainable business growth in the AI era.

Dr Agnes KY Tai PhD CCB.D SCR®, ESG Investing FRM CAIA MBA FHKIoD

Director of Great Glory Investment Corporation and Senior Advisor of iPartners Holdings Ltd

Previous CGj articles by Dr Tai are available on the journal website: https://cgj.hkcgi.org.hk.